The landscape of AI agents is rapidly expanding. We’re not just talking about chatbots or simple automation tools—this is an entirely new category of digital entities that operate across different domains, interact with systems dynamically, and increasingly take on responsibilities that were once exclusively human. But not all AI agents are created equal. To effectively govern and leverage them, we need to understand the different types of AI agents and why they matter.

The Three Core Types of AI Agents

Broadly speaking, AI agents fall into three categories:

1. Company AI Agents

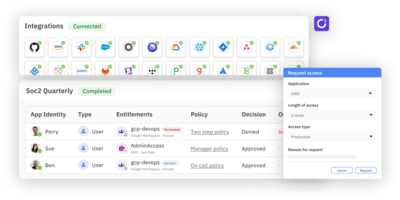

These are system-wide agents embedded within an organization’s core business applications that can act on behalf of the organization. Think of a Salesforce AI agent that proactively checks leads or a GitHub AI agent that reviews code. These agents function much like traditional service accounts but with far greater intelligence and autonomy.

Why They Matter:

- Act like service accounts and execute workflows.

- Operate within a single application, with clear boundaries and permissions.

- Introduce a multiplier effect—each SaaS tool may have its own agent.

- Pose risks if over-privileged, potentially causing massive automation failures.

2. Employee AI Agents

Employee AI agents act on behalf of an individual user and exist to enhance that person’s productivity. Unlike company AI agents, which are tied to a specific app, employee AI agents work across multiple tools. They help write emails, synthesize reports, automate tasks, and retrieve relevant data from disparate systems.

Why They Matter:

- Increase employee efficiency by automating repetitive tasks.

- Inherit user permissions, which introduces security challenges.

- Require a new approach to access control—users need to manage which permissions their agents have.
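
One way to picture agent-level access control is as a delegation step in which the user hands the agent an explicit subset of their own permissions rather than the full set. The sketch below is illustrative only: `AgentGrant`, `delegate`, `is_allowed`, and scope strings such as `mail:read` are hypothetical names, not part of any real product or standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentGrant:
    """Hypothetical record of what a user has delegated to one agent."""
    agent_id: str
    user_id: str
    scopes: frozenset


def delegate(user_id, user_scopes, requested_scopes, agent_id):
    """Grant the agent only the requested scopes, never more than the user holds."""
    requested = frozenset(requested_scopes)
    excess = requested - frozenset(user_scopes)
    if excess:
        # An agent must not end up with permissions its user lacks.
        raise PermissionError(f"user {user_id} cannot delegate: {sorted(excess)}")
    return AgentGrant(agent_id=agent_id, user_id=user_id, scopes=requested)


def is_allowed(grant, scope):
    """Check a single action against the delegated subset."""
    return scope in grant.scopes


# Assumed example data: the user holds three scopes but delegates only one.
user_scopes = {"mail:read", "mail:send", "crm:read"}
grant = delegate("alice", user_scopes, {"mail:read"}, agent_id="assistant-1")
print(is_allowed(grant, "mail:read"))  # True
print(is_allowed(grant, "mail:send"))  # False
```

The agent can read mail because that scope was delegated, but it cannot send mail even though its user can. That gap between the two is the “sub-user” access this section describes.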

3. Agent-to-Agent Interactions

Agent-to-agent interactions are the most complex and least understood category. Here, AI agents communicate with one another and make decisions among themselves. Imagine an AI agent in a finance system talking to an AI agent in a CRM system to validate a contract’s terms before executing a payment.

Why They Matter:

- Enable true machine-driven automation without human bottlenecks.

- Raise significant security and compliance concerns—who audits the decisions these agents make?

- Introduce complex data flow and authorization challenges, as multiple agents work together dynamically.
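
The finance-to-CRM scenario can be sketched as a receiving agent that checks the caller against an allow-list and records every decision for later audit. All names here (`CrmAgent`, `AuditLog`, the agent IDs, and the contract data) are assumptions made for illustration. A real deployment would rely on authenticated credentials rather than a plain caller ID string.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class AuditLog:
    """Hypothetical append-only record of agent-to-agent requests."""
    entries: list = field(default_factory=list)

    def record(self, caller_id, action, allowed):
        self.entries.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "caller": caller_id,
            "action": action,
            "allowed": allowed,
        })


class CrmAgent:
    """Hypothetical CRM-side agent that answers contract queries."""

    def __init__(self, contracts, allowed_callers, audit):
        self._contracts = contracts
        self._allowed_callers = allowed_callers  # action -> set of caller IDs
        self._audit = audit

    def get_contract_terms(self, caller_id, contract_id):
        action = "contract:read"
        allowed = caller_id in self._allowed_callers.get(action, set())
        # Log the decision whether or not the request is granted.
        self._audit.record(caller_id, action, allowed)
        if not allowed:
            raise PermissionError(f"{caller_id} may not perform {action}")
        return self._contracts[contract_id]


audit = AuditLog()
crm = CrmAgent(
    contracts={"C-1": {"amount": 5000, "currency": "USD"}},
    allowed_callers={"contract:read": {"finance-agent"}},
    audit=audit,
)

# The finance agent validates the contract amount before paying.
terms = crm.get_contract_terms("finance-agent", "C-1")
payment_ok = terms["amount"] == 5000
print(payment_ok)  # True
```

Logging denied requests alongside granted ones gives auditors a trail of what each agent attempted, not only what it was allowed to do.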

The Emerging Security and Identity Problem

Each of these AI agent types introduces new identity and security risks. Employee AI agents, for example, often need a subset of their user’s permissions, but without a clear way to define “sub-user” access, they may inherit too much. Agent-to-agent interactions introduce trust issues—how do we verify that an agent has the appropriate permissions to request data from another?

Preparing for the Future of AI Agents

Organizations need to rethink identity and access management to accommodate these new AI actors. Traditional security models assume relatively static identities with long lifespans, but AI agents are ephemeral, dynamic, and constantly evolving.

What’s needed?

- New authorization frameworks that allow granular, task-specific permissions.

- AI-native identity governance to track agent identities and their activities.

- Automated policy enforcement to prevent unauthorized data access and privilege escalation.
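
Because agents are short-lived, a task-specific permission can be modeled as a grant that names its scopes and expires on its own. The following is a minimal sketch under that assumption. `TaskGrant`, `issue_grant`, `check`, and the scope names are hypothetical, and timestamps are passed in explicitly so the behavior is easy to follow.

```python
import time
from dataclasses import dataclass


@dataclass(frozen=True)
class TaskGrant:
    """Hypothetical permission tied to one agent, one task, and a deadline."""
    agent_id: str
    task: str
    scopes: frozenset
    expires_at: float  # Unix timestamp in seconds


def issue_grant(agent_id, task, scopes, ttl_seconds, now=None):
    """Create a grant that is valid for ttl_seconds from `now`."""
    now = time.time() if now is None else now
    return TaskGrant(agent_id, task, frozenset(scopes), now + ttl_seconds)


def check(grant, scope, now=None):
    """Allow an action only if the grant is unexpired and covers the scope."""
    now = time.time() if now is None else now
    return now < grant.expires_at and scope in grant.scopes


# Assumed example: a five-minute, read-only grant issued at t=1000.
grant = issue_grant("report-agent", "q3-summary", {"sales:read"},
                    ttl_seconds=300, now=1000.0)
print(check(grant, "sales:read", now=1100.0))   # True
print(check(grant, "sales:write", now=1100.0))  # False
print(check(grant, "sales:read", now=1300.0))   # False
```

The third check fails because the grant has expired, so no separate revocation step is needed once the task is over.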

The AI landscape is diversifying rapidly, and organizations that don’t adapt will struggle to maintain control. Understanding the different types of AI agents and their implications is the first step toward navigating the era of enterprise AI.